Crater Detection System Project for Space Exploration

Project Context

As part of an elective course called "Machine Learning in Robotics and Edge Devices for Space Exploration," our team developed an efficient camera system that detects craters on the device, so that only relevant footage is sent over the network and network load is minimized. This proof-of-concept project runs a custom object detection model on a Raspberry Pi Pico and integrates with a custom web application that visualizes the filtered footage in real time.

Technical Stack

- Machine Learning: Leveraged Google Colab for collaborative coding and model training, PyTorch for building the object detection model, and NumPy for handling numerical operations.

- Web Application: Developed using React for the frontend, Node.js for the backend, and Tailwind CSS for styling, providing a responsive and user-friendly interface.

- Embedded Development: Applied TinyML techniques to deploy machine learning models on low-power devices, and used Pico4ML to target the Raspberry Pi Pico platform specifically.

System Overview

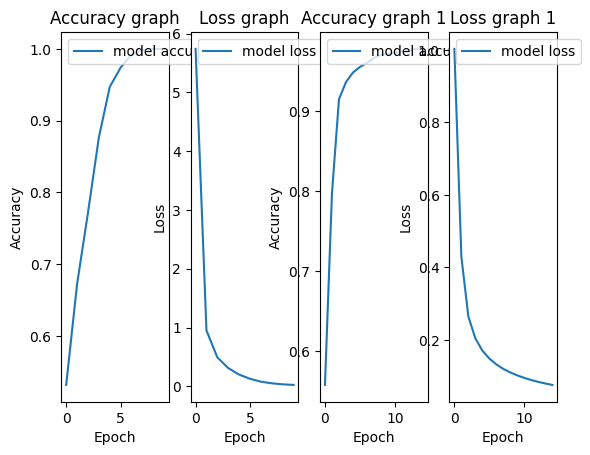

Machine Learning Model

The core of the project is a custom object detection model trained to identify craters in camera feed data. The model was developed, trained, and tested in Google Colab using PyTorch and NumPy, then pruned to reduce its size for deployment on the Raspberry Pi Pico. This optimization was crucial for running the model on an edge device with limited computing resources.
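The source does not say which pruning method was used. One common option is unstructured magnitude pruning, sketched below in plain Python: the chosen fraction of weights with the smallest absolute values is set to zero. The function name `magnitude_prune` and the example weights are hypothetical. In PyTorch, `torch.nn.utils.prune.l1_unstructured(module, name="weight", amount=0.5)` applies the same rule to a layer's weight tensor.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


# Hypothetical weights for one small layer.
layer = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
print(magnitude_prune(layer, 0.5))  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
```

Zeroing weights does not shrink a dense tensor on its own. The size saving appears once the pruned weights are stored in a sparse or compressed format, or when whole channels are removed (structured pruning).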

Embedded Implementation

The Raspberry Pi Pico, equipped with Pico4ML, runs the optimized model on live camera frames directly on the device. Each captured image is passed through the model, and only footage in which the desired shapes (craters) are detected is kept.
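The sketch below shows the filtering step. It assumes the model returns a list of labeled detections with confidence scores. `Detection`, `should_transmit`, `filter_frames`, `fake_detect`, and the 0.5 threshold are all hypothetical. The code is host-side Python that illustrates the logic; it is not the firmware that runs on the Pico.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float


def should_transmit(detections: list[Detection], label: str = "crater",
                    min_confidence: float = 0.5) -> bool:
    """Keep a frame only if at least one crater is detected above the threshold."""
    return any(d.label == label and d.confidence >= min_confidence for d in detections)


def filter_frames(frames, detect, min_confidence=0.5):
    """Yield only the frames worth sending; `detect` stands in for the model call."""
    for frame in frames:
        if should_transmit(detect(frame), min_confidence=min_confidence):
            yield frame


# Stand-in detector for illustration: pretends odd-numbered frames contain a crater.
def fake_detect(frame_id):
    return [Detection("crater", 0.9)] if frame_id % 2 else []


print(list(filter_frames(range(6), fake_detect)))  # [1, 3, 5]
```

Frames without a confident crater detection are discarded before they reach the network. This filtering is where the reduction in network load comes from.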

Data Handling and Image Processing

A Python script handles the serial link with the Raspberry Pi Pico and receives the image data. This script:

- Reads data from the camera through a serial connection.

- Processes the data into BMP images and stores them in directories created dynamically from the current date and time, keeping captures organized and easy to retrieve.

- Uses the Pillow library to convert the raw image data into BMP format, which the web application can display.
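Here is a minimal sketch of such a receiver. It rests on several assumptions. The device is assumed to send 96×96 8-bit grayscale frames, each preceded by a hypothetical two-byte start marker. The date-based directory layout is also an assumption. To keep the sketch dependency-free, it writes the BMP with the standard library's `struct` module instead of Pillow. With Pillow, `Image.frombytes("L", (width, height), data).save(path)` does the same job.

```python
import os
import struct
from datetime import datetime

FRAME_MARKER = b"\xff\xaa"  # hypothetical start-of-frame marker sent by the device
WIDTH, HEIGHT = 96, 96      # hypothetical frame size, 8-bit grayscale


def read_frame(stream, width=WIDTH, height=HEIGHT):
    """Wait for FRAME_MARKER, then read exactly width * height pixel bytes.

    `stream` is anything with .read(n), such as a pyserial port or io.BytesIO.
    Returns None if the stream runs dry before a complete frame arrives.
    """
    window = b""
    while window != FRAME_MARKER:
        byte = stream.read(1)
        if not byte:
            return None
        window = (window + byte)[-len(FRAME_MARKER):]  # keep only the last 2 bytes
    size = width * height
    data = b""
    while len(data) < size:
        chunk = stream.read(size - len(data))
        if not chunk:
            return None
        data += chunk
    return data


def gray_to_bmp(pixels, width, height):
    """Encode 8-bit grayscale pixels as an uncompressed 24-bit BMP file."""
    row_size = (width * 3 + 3) & ~3  # BMP rows are padded to a multiple of 4 bytes
    padding = b"\x00" * (row_size - width * 3)
    rows = []
    for y in range(height - 1, -1, -1):  # BMP stores rows bottom-up
        row = pixels[y * width:(y + 1) * width]
        rows.append(b"".join(bytes((p, p, p)) for p in row) + padding)
    image = b"".join(rows)
    offset = 14 + 40  # file header + BITMAPINFOHEADER
    file_header = struct.pack("<2sIHHI", b"BM", offset + len(image), 0, 0, offset)
    info_header = struct.pack("<IiiHHIIiiII", 40, width, height, 1, 24, 0,
                              len(image), 2835, 2835, 0, 0)  # 2835 px/m is about 72 dpi
    return file_header + info_header + image


def save_frame(pixels, root="captures", width=WIDTH, height=HEIGHT, now=None):
    """Write a frame to root/YYYY-MM-DD/HH-MM-SS_micro.bmp and return the path."""
    if now is None:
        now = datetime.now()
    directory = os.path.join(root, now.strftime("%Y-%m-%d"))
    os.makedirs(directory, exist_ok=True)  # date folder is created on first use
    path = os.path.join(directory, now.strftime("%H-%M-%S_%f") + ".bmp")
    with open(path, "wb") as f:
        f.write(gray_to_bmp(pixels, width, height))
    return path
```

`read_frame` accepts any object with a `read(n)` method. A pyserial port such as `serial.Serial("/dev/ttyACM0", 115200, timeout=2)` can therefore be passed in directly (the port name and baud rate are placeholders). With a timeout set, `read` returns early with fewer bytes (possibly none) when data stops arriving. In that case `read_frame` returns `None`, so a capture loop can stop or retry.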

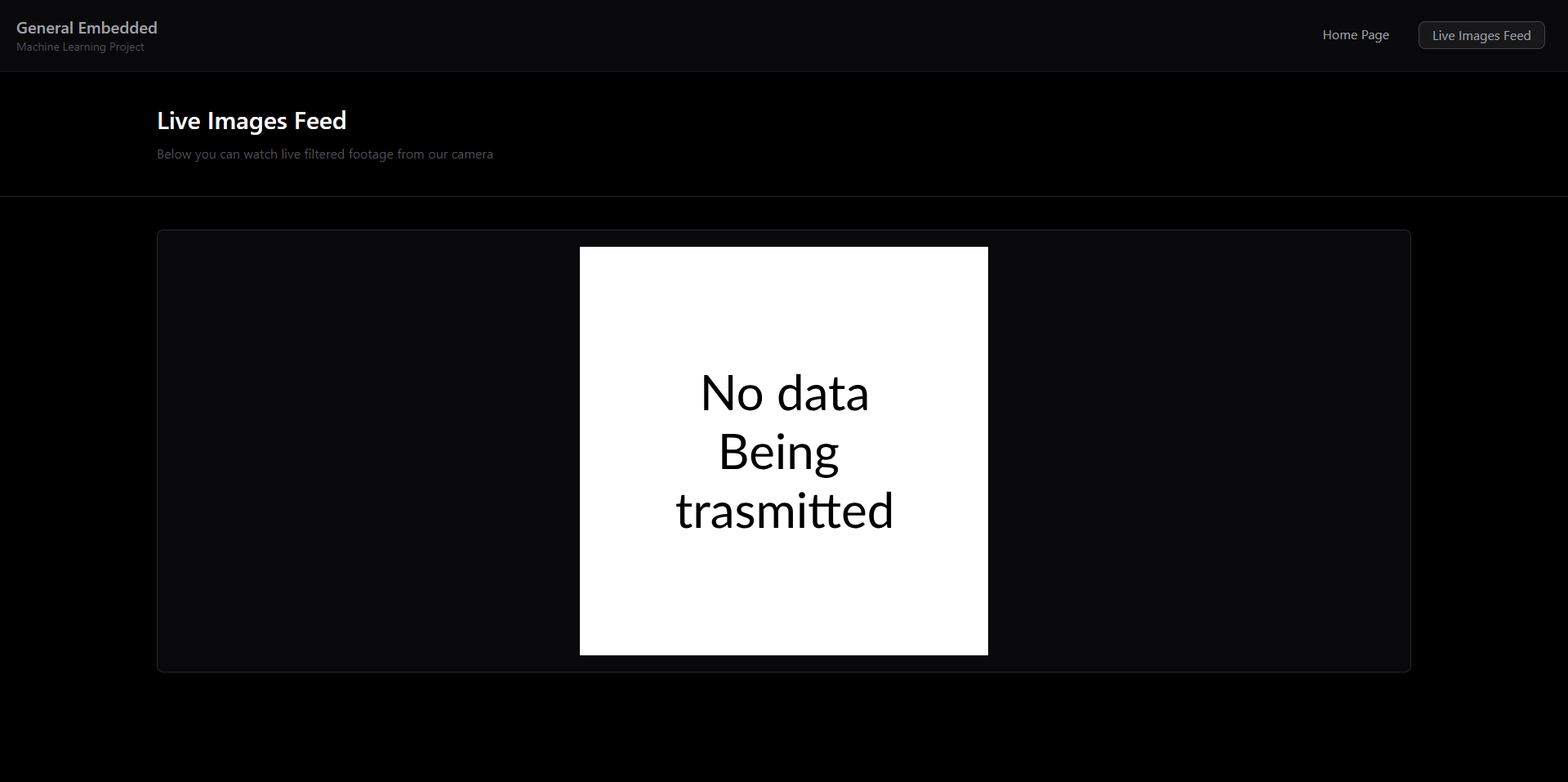

Web Application

The web application displays live, filtered footage of detected craters to users. Built with React, Node.js, and Tailwind CSS, it provides a responsive and interactive interface. The application fetches the processed images stored by the Python script and displays them as they arrive.
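One way to make fetching incremental is for the backend to return only the images written after a cursor supplied by the client. The sketch below uses Python for consistency with the other examples, although the project's backend is Node.js. The function `list_new_images`, the `.bmp` filter, and the use of modification times as the cursor are all assumptions.

```python
import os


def list_new_images(root: str, since: float = 0.0, ext: str = ".bmp"):
    """Return (mtime, path) pairs for images modified after `since`, oldest first.

    The client sends back the largest mtime it has already seen, so each poll
    only transfers images that appeared after the previous one.
    """
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower().endswith(ext):
                path = os.path.join(dirpath, name)
                mtime = os.path.getmtime(path)
                if mtime > since:
                    found.append((mtime, path))
    found.sort()
    return found
```

Comparing modification times is simple but can miss a file written within the same timestamp tick as the cursor. Filenames or sequence numbers that increase monotonically avoid that edge case.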

Challenges and Solutions

- CORS issues: The browser blocked cross-origin requests from the frontend during development. We resolved this by configuring the Node.js backend to send the appropriate headers to allow cross-origin requests.

- Efficient refreshing: We implemented a mechanism for the web application to refresh its feed of locally stored files, keeping the display up to date without overloading the server or the client browser.
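The source does not show the Node.js fix for the CORS issue. The sketch below illustrates the same idea with Python's standard-library `http.server`. It serves the capture directory, adds an `Access-Control-Allow-Origin` header to every response, and gives a minimal reply to preflight `OPTIONS` requests. `CORSRequestHandler`, `make_server`, and the allowed origin are hypothetical. In Node.js, the same header can be set with `res.setHeader`, or the `cors` middleware package can be used with Express.

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer

ALLOWED_ORIGIN = "http://localhost:3000"  # hypothetical frontend dev-server origin


class CORSRequestHandler(SimpleHTTPRequestHandler):
    """Static file handler that adds a CORS header to every response."""

    def end_headers(self):
        self.send_header("Access-Control-Allow-Origin", ALLOWED_ORIGIN)
        super().end_headers()

    def do_OPTIONS(self):
        # Answer CORS preflight requests with no body.
        self.send_response(204)
        self.send_header("Access-Control-Allow-Methods", "GET, OPTIONS")
        self.end_headers()


def make_server(directory, port=8000):
    """Serve `directory` on localhost; port=0 picks a free port."""
    handler = partial(CORSRequestHandler, directory=directory)
    return ThreadingHTTPServer(("127.0.0.1", port), handler)
```

This sketch names a single allowed origin rather than `*`. That way, other sites cannot read the footage, while the frontend's own origin is still let through.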

Project Significance

This project demonstrates the feasibility of deploying machine learning models on edge devices for applications such as crater detection in space exploration. By processing data directly on the device and transmitting only frames that contain craters, the system reduces network load. This makes it better suited to remote operations where bandwidth is a precious commodity.
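A back-of-the-envelope model makes the saving concrete. Let $f$ be the capture rate in frames per second, $S$ the size of one frame in bytes, and $p$ the fraction of frames in which craters are detected. These symbols are introduced here for illustration, and the model ignores the overhead of any detection metadata.

$$
B_{\text{all}} = f\,S, \qquad B_{\text{sent}} = p\,f\,S, \qquad \frac{B_{\text{sent}}}{B_{\text{all}}} = p
$$

Bandwidth use therefore scales with how often craters actually appear in view: the rarer they are, the larger the saving.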

Future Work

The successful deployment of this project opens avenues for further research and development, including:

- Enhancing the model's accuracy and efficiency through advanced machine learning techniques.

- Expanding the system's capabilities to detect other relevant features in the exploration domain.

- Improving the web application's functionality to include features like real-time alerts and detailed analytics.

Acknowledgments

This project was made possible by the collaborative efforts of our team, the guidance of our instructors, and the resources provided by the elective course on Machine Learning in Robotics and Edge Devices for Space Exploration.